Google Code Jam Archive — Virtual World Finals 2020 problems

Overview

We know the Code Jam 2020 World Finals were a different experience, but the problems themselves were as challenging as usual! The first one, Pack the Slopes, was worth fewer points and could be solved with the right applications of some evergreen techniques. The other four were all worth the same (larger) amount of points, so contestants had to make tactical decisions based on their own strengths and preferences. Would they try the insight-based Adjacent and Consecutive, the implementation-heavy Hexacoin Jam, the tricky geometric Musical Cords, or the elegantly satisfying graph-based Replace All? Also, which Test Set 1s were the lowest-hanging fruit?

Only 13 minutes in, Benq submitted the first correct full solution, which was for Pack the Slopes. After that, it took another hour for one of the other problems to be solved (Replace All, by ecnerwala and then yutaka1999). ecnerwala was the first to break the 100 point barrier, around two and a half hours into the contest, and ksun48 reached the same point total about 15 minutes later.

The top of the board was quiet for a while, but then at 3:11, Gennady.Korotkevich made several submissions and leapt to 173 points, lacking only the second test set of Musical Cords. The other contestants didn't know the true results, of course, and there was a chance that someone could have overtaken him... but nobody did, and he became our seven-time Code Jam champion! What an impressive streak — our hats are off to you. In second, third, and fourth, all with 131 points, were ksun48 (3:30:36), ecnerwala (3:55:58), and scottwu (4:13:58) — also excellent performances!

We hope that Code Jam 2020 provided you with the usual joy of solving algorithmic problems, and we especially appreciate your participation this time around. We look forward to welcoming everyone back again in 2021, and until then, you can keep your skills sharp on our platform with Kick Start. All of us on the team hope that you and your loved ones are safe and healthy.

Cast

Pack the Slopes: Written by Max Ward. Prepared by Darcy Best and Max Ward.

Adjacent and Consecutive: Written by Pablo Heiber. Prepared by Mohamed Yosri Ahmed, Timothy Buzzelli, and Jonathan Irvin Gunawan.

Hexacoin Jam: Written by Darcy Best. Prepared by Mohamed Yosri Ahmed and Pablo Heiber.

Musical Cords: Written by Max Ward. Prepared by the 🐱 Peppurr Fan Squad 🐱: Darcy Best, Timothy Buzzelli, and Max Ward.

Replace All: Written by Pablo Heiber. Prepared by John Dethridge.

Solutions and other problem preparation and review by Mohamed Yosri Ahmed, Sadia Atique, Liang Bai, Tomi Belan, Darcy Best, Timothy Buzzelli, Chun-Sung Ferng, Jonathan Irvin Gunawan, Md Mahbubul Hasan, Pablo Heiber, Ikumi Hide, Yui Hosaka, Artem Iglikov, Gabriel-Robert Inelus, ChanMin Kim, Petr Mitrichev, Kimiyuki Onaka, Archie Pusaka, Nafis Sadique, Pi-Hsun Shih, Ian Tullis, Max Ward, and Marten Wiman.

Analysis authors:

- Pack the Slopes: Max Ward.

- Adjacent and Consecutive: Pablo Heiber.

- Hexacoin Jam: Pablo Heiber.

- Musical Cords: Max Ward.

- Replace All: Pablo Heiber.

A. Pack the Slopes

Problem

You are trying to organize a group of skiers. The skiers are taking a trip to a large mountain, which has been rented for the day.

There are N rest points numbered from 1 to N on the mountain, and they are connected by N-1 slopes. Each slope starts at some rest point and goes directly to another rest point, with no intermediate slopes or rest points. A slope can be traversed in only one direction.

Each skier starts at the summit rest point and traverses a slope to reach another rest point. From there, the skier can traverse another slope to reach another rest point, and so on. Once a skier reaches their destination rest point, they stop skiing for the day and head to the ski lodge for hot cocoa. The destination rest point cannot be the summit rest point. However, notice that a skier's destination rest point can be the start of zero or more slopes; that is, a skier does not necessarily have to keep using available slopes until there are none available: they can always walk carefully down the rest of the mountain! For all rest points, there is exactly one sequence of slopes that a skier can use to reach it from the summit rest point.

Each slope can accommodate only a certain total number of skiers in a day, after which the snow gets too choppy to ski. In addition, the ski resort can charge or reward each skier for each slope that they ski on. Each slope may have a different price, and each skier must pay the price for each slope they ski on. A slope's price can be positive, zero, or even negative; a negative price represents a bounty awarded for testing that slope. As the organizer, you pay all the slope prices and collect all the bounties on behalf of your group of skiers. Notice that if multiple skiers use the same slope, you pay that slope's price or collect the slope's bounty multiple times. The sum of all costs you pay minus the sum of all bounties you collect is the total expense for the trip. The expense can be positive, zero, or negative. A negative expense means that you actually made money on the trip!

As the organizer, you want to figure out the maximum number of skiers that you can put on the mountain. Also, you would like to figure out the minimum possible expense for a trip with that maximum number of skiers.

Input

The first line of the input gives the number of test cases, T. T test cases follow. The first line of a test case contains a single integer N: the number of rest points on the mountain.

Each of the final N-1 lines of a test case describes a slope via four integers Ui, Vi, Si, and Ci. These are the slope's starting rest point, the slope's ending rest point, the maximum number of skiers the slope can accommodate, and the slope's price per skier, respectively.

The summit rest point where the skiers start from is always numbered 1.

Output

For each test case, output one line containing Case #x: y z,

where x is the test case number (starting from 1),

y is the maximum number of skiers, and z is the

minimum expense for having y skiers

ski at least one slope each.

Limits

Time limit: 30 seconds per test set.

Memory limit: 1GB.

1 ≤ Ui ≤ N, for all i.

2 ≤ Vi ≤ N, for all i. (No

slope can end at the summit rest point.)

Ui ≠ Vi, for all i.

1 ≤ Si ≤ 105, for all i.

-105 ≤ Ci ≤ 105, for all i.

There is exactly one sequence of slopes that a skier can

use to reach rest point r from the summit rest point, for all r.

Test Set 1 (Visible Verdict)

1 ≤ T ≤ 100.

2 ≤ N ≤ 1000.

Test Set 2 (Hidden Verdict)

T = 17.

2 ≤ N ≤ 105.

Sample

2 4 1 2 2 5 1 3 2 5 3 4 1 -2 7 4 7 2 2 1 3 5 5 1 4 2 -1 3 2 3 -2 3 5 2 -1 3 6 2 2

Case #1: 4 18 Case #2: 7 15

In Sample Case #1, we can send one skier to rest point 4, one skier to rest point 3, and two skiers to rest point 2.

In Sample Case #2, we can send three skiers to rest point 2, two skiers to rest point 5, and two skiers to rest point 4.

Notice that the first slope listed in a test case does not need to start at the summit rest point, and that slopes can have Ui > Vi.

B. Adjacent and Consecutive

Problem

Two players, A and B, are playing a game. The game uses N tiles numbered 1 through N, and a board consisting of a single horizontal row of N empty cells.

Players alternate turns, with Player A going first. On a turn, a player picks an unused tile and an empty cell and places the tile in the cell. At the end of the game, Player A wins if there are two tiles with consecutive numbers in adjacent cells (regardless of who put them there). Otherwise, Player B wins. For example, final boards of 1 2 3 4 and 4 1 3 2 are examples of wins for Player A, and a final board of 3 1 4 2 is an example of a win for Player B. (Notice that consecutive numbers may appear in either order.)

You just watched two players play a game, but you could not understand their strategy. They may not have played rationally! You decide to compare their moves against an optimal strategy.

A winning state is a state of the game from which the player whose turn it is can guarantee a win if they play optimally, regardless of what the opponent does. A mistake is a move made while in a winning state that results in the opponent having a winning state on their next turn. (Notice that it is not possible to make a mistake on the last turn of the game, since if the last turn begins with a winning state for that player, it must be because that player's only move results in a win.)

Given the N moves, count the number of mistakes made by each player.

Input

The first line of the input gives the number of test cases, T. T test cases follow. Each case begins with one line containing an integer N: the number of tiles in the game (which is also the number of turns, and the number of cells on the board).

Then, N more lines follow. The i-th of these (counting starting from 1) has two integers Mi and Ci. Respectively, these represent the tile chosen on the i-th turn, and the index of the cell (counting from 1 at the left end to N at the right end) where that tile is placed.

Note that it is Player A's turn whenever i is odd, and Player B's turn whenever i is even.

Output

For each test case, output one line containing Case #x: a b, where x

is the test case number (starting from 1), a is the total number of mistakes made by

Player A, and b is the total number of mistakes made by Player B.

Limits

Time limit: 40 seconds per test set.

Memory limit: 1GB.

1 ≤ T ≤ 100.

1 ≤ Mi ≤ N, for all i.

Mi ≠ Mj, for all i ≠ j.

1 ≤ Ci ≤ N, for all i.

Ci ≠ Cj, for all i ≠ j.

Test Set 1 (Visible Verdict)

4 ≤ N ≤ 10.

Test Set 2 (Hidden Verdict)

4 ≤ N ≤ 50.

Sample

3 6 2 2 3 5 4 3 6 6 1 4 5 1 4 4 1 1 3 3 4 2 2 4 3 1 2 2 4 3 1 4

Case #1: 2 1 Case #2: 0 0 Case #3: 0 0

Notice that any game always begins in a winning state for Player A. For example, Player A can play tile 2 in cell 2 (i.e. the second cell from the left). No matter what Player B does on their turn, at least one of tiles 1 and 3 will be unused, and at least one of cells 1 and 3 will be empty. Then Player A can play one of those tiles in one of those cells, and this secures a win for Player A regardless of what happens in the rest of the game.

In Sample Case #1, the game plays out as follows:

- _ _ _ _ _ _. This is a winning state for Player A, as explained above.

- Turn 1: Player A plays tile 2 in cell 2.

- _ 2 _ _ _ _. This is not a winning state for Player B, as explained above; Player B cannot guarantee a win, regardless of their remaining choices in the game.

- Turn 2: Player B plays tile 3 in cell 5.

- _ 2 _ _ 3 _. This is a winning state for Player A; for example, they could play tile 1 in cell 3.

- Turn 3: Player A plays tile 4 in cell 3.

- _ 2 4 _ 3 _. This is a winning state for Player B; for example, they could play tile 5 in cell 1, and then they would be guaranteed to win no matter what Player A did. So Player A's last move was a mistake!

- Turn 4: Player B plays tile 6 in cell 6.

- _ 2 4 _ 3 6. This is a winning state for Player A, since Player A could play tile 1 in cell 1. So Player B's last move was a mistake!

- Turn 5: Player A plays tile 1 in cell 4.

- _ 2 4 1 3 6. This is a winning state for Player B, so Player A's last move was a mistake!

- Turn 6: Player B plays tile 5 in cell 1.

- 5 2 4 1 3 6. The game is over, and Player B has won.

In total, Player A made 2 mistakes and Player B made 1 mistake.

In Sample Case #2, although some of the moves may look risky, neither player made a mistake as defined in this problem. Player A never gave up a winning state to Player B, and Player B had no opportunity to make a mistake because they were never in a winning state.

In Sample Case #3, notice that even though the outcome of the game is determined after the second move (since that move creates a pair of adjacent and consecutive tiles), all tiles must be placed in each game. Moreover, although the second move assures Player A's victory, it is not a mistake for Player B because Player B was not in a winning state at the time.

C. Hexacoin Jam

Problem

The Code Jam team's first cryptocurrency, jamcoins, never caught on. This year, we are trying again with hexacoins, which are named for their use of base 16. To "mine" a D-digit hexacoin, one has to work with integers using exactly D base 16 digits, including leading zeroes if needed. Each value represents an integer between 0 and 16D - 1, inclusive. Base 16 digits are represented by the numbers 0 through 9 and the uppercase letters A through F, as usual. For example, F2B, 0C8 and 000 are valid values when D=3, corresponding to the base 10 values 3883, 200 and 0. On the other hand, 1234, DF, C0DE and JAM are not valid values when D=3.

When performing addition of D-digit base 16 values, any overflow digits are dropped. That is, the addition is performed modulo 16D. For example, F2B + 0C8 = FF3 (4083 in base 10) and F2B + F2B = E56 (3670 in base 10, because the sum's result is 7766, and taking modulo 163 yields 3670).

To "mine" a D-digit hexacoin, a computer must perform the following steps:

- Choose a list L of N D-digit base 16 values L1, L2, ..., LN.

- Choose a target range of D-digit base 16 values: the numbers from S to E, inclusive.

- Choose a permutation P of the base 16 digits 0 through F, uniformly at random from among all 16! such permutations.

- Apply P to all digits of all numbers in the list, creating a new list L' consisting of N D-digit base 16 values. Formally, the j-th digit of the i-th element of L' is the result of applying P to the j-th digit of the i-th element of L.

- Choose a pair of elements from L' without replacement, uniformly at random from among all such possible choices, and independently of the choice of permutation.

- Calculate the sum (dropping overflow digits) of the two chosen elements.

If the sum calculated in the last step is between S and E, inclusive, then a hexacoin has been found! For example, suppose that:

- L = [134, 000, FFB, 000, AA9].

- S = 85C and E = EDF.

- The computer happens to choose P = (0 → 4, 1 → A, 2 → 2, 3 → 8, 4 → 9, 5 → B, 6 → C, 7 → 7, 8 → F, 9 → 1, A → 0, B → 3, C → 5, D → 6, E → E, F → D).

Then, when P is applied to L, the resulting L' is [A89, 444, DD3, 444, 001]. Notice that P is not applied to S and E.

There are (5 × 4) / 2 = 10 pairs of values to choose, and each pair has a probability of 1/10 of being chosen. The only sums that fall within the range are A89 + DD3 = 85C, 444 + 444 = 888, A89 + 001 = A8A, DD3 + 001 = DD4, and A89 + 444 = ECD (twice).

The first two steps are already computed and you know the list L and the range [S, E] that were chosen. What is the probability that a hexacoin is found after the rest of the process is performed?

Input

The first line of the input gives the number of test cases, T. T test cases follow. Each test case consists of three lines. The first line contains two integers N and D: the size of the given list and the number of digits to work with, respectively. The second line contains two D-digit base 16 numbers S and E: the inclusive lower and upper bounds of the target range, respectively. Then there is one more line containing N D-digit base 16 numbers L1, L2, ..., LN, representing the values in the list.

Output

For each test case, output one line containing Case #x: y z,

where x is the test case number (starting from 1) and

y and z are non-negative integers, such that the

fraction y/z represents the probability of

finding a hexacoin, under the conditions described above. All of

x, y, and z must be in base 10. If there

are multiple acceptable values for y and z, choose

the ones such that z is minimized.

Limits

Time limit: 90 seconds per test set.

Memory limit: 1GB.

2 ≤ N ≤ 450.

S contains exactly D characters.

Each character of S is a base 16 digit.

E contains exactly D characters.

Each character of E is a base 16 digit.

S ≤ E.

Li contains exactly D characters, for all i.

Each character of Li is a base 16 digit, for all i.

Test Set 1 (Visible Verdict)

1 ≤ T ≤ 100.

2 ≤ D ≤ 3.

Test Set 2 (Hidden Verdict)

1 ≤ T ≤ 100.

2 ≤ D ≤ 4.

Test Set 3 (Hidden Verdict)

1 ≤ T ≤ 10.

2 ≤ D ≤ 5.

Sample

4 2 2 10 10 00 FF 2 2 10 11 00 FF 4 3 FFF FFF 230 A10 010 F70 4 3 AFF FFF 230 A10 010 F70

Case #1: 7 120 Case #2: 1 15 Case #3: 0 1 Case #4: 2731 8736

In Sample Case #1, the target range is just a single value 10. Since the result ends with 0, the sum of the values assigned to both last digits 0 and F must end in 0 as well. Since P[0] and P[F] are different values, their sum cannot be exactly 0. Therefore, P[0] + P[F] must be 10 (in base 16). There are 7 pairs of different digits that accomplish that. P[0] and P[F] cannot both be 8. All 7 pairs lead to an overall sum of 10 (after dropping an overflow 1). Therefore, there are 14 assignments of different digits to 0 and F that lead to a hexacoin. There are 16 × 15 possible assignments to those digits, so the result is 14/240 = 7/120.

In Sample Case #2, we need to add the probability of the result being exactly 11 to the result of Sample Case #1. The only way that happens is if 0 and F are assigned to 0 and 1, in either order. That has a probability of 2/240=1/120, leading to a total of 7/120 + 1/120 = 8/120 = 1/15.

In Sample Case #3, notice that regardless of which permutation and pair of numbers

the computer chooses from the list, we will add two numbers that end in the same digit.

That produces an even result, even after taking it modulo 163. Since the only

value in range is odd, we have no hope of mining a hexacoin in this case. Notice that

0 2 is an invalid representation of the answer because z

would not be minimum.

D. Musical Cords

Problem

Lauren is trying to play the most beautiful notes possible using a harp. The harp is a circle with a radius of R centimeters. To play a note, a cord must be attached to the harp in a way that connects two different attachment points on the perimeter of the circle. Lauren then plucks this cord to play a note.

There are N attachment points on the perimeter of the circular harp at which a cord can be attached. The i-th such attachment point is at a location that is Di nanodegrees (a nanodegree is 10-9 degrees) clockwise around the perimeter of the circular harp, starting from the rightmost point on the perimeter.

Not all attachment points use the same technology to properly fix the cords onto them. The i-th attachment point requires Li centimeters of cord to be used for attaching. A cord fixed between two different attachment points i and j needs to be exactly Li + Lj + distance(i, j) centimeters long. By distance(i, j) we mean the length of the geometric chord connecting the i-th and j-th attachment points, that is, the Euclidean distance between the two points.

Lauren thinks that notes sound better when they come from longer cords. What are the K longest cords that can be used with Lauren's harp?

Input

The first line of the input gives the number of test cases, T. T test cases follow. The first line of a test case contains three integers: N, R and K: the number of attachment points, the radius of the circular harp in centimeters, and the number of lengths of cords that Lauren is interested in knowing.

The next N lines describe the attachment points. The i-th of these lines contains two integers, Di and Li, which describe the position (in number of nanodegrees clockwise from the rightmost point of the harp) and length of cord in centimeters needed at the i-th attachment point.

Output

For each test case, output one line containing Case #x: y1 y2

... yK, where

x is the test case number (starting from 1), and yn is the

n-th value in the list of lengths of all N×(N-1)/2 cords

that can be used in Lauren's harp, sorted in non-increasing order.

Each yn will be considered correct if it is within an absolute or

relative error of 10-9 of the correct answer. See the

FAQ for an

explanation of what that means, and what formats of real numbers we accept.

Limits

Time limit: 120 seconds per test set.

Memory limit: 1GB.

1 ≤ T ≤ 100.

N = 150000 in at most 10 cases.

5 ≤ N ≤ 104 in all cases with N ≠ 150000.

1 ≤ R ≤ 109.

0 ≤ D1.

Di < Di+1, for all i.

DN < 360 × 109.

Test Set 1 (Visible Verdict)

Li is chosen independently and uniformly at random between 1 and

109, inclusive, for each i.

K = 1.

Test Set 2 (Hidden Verdict)

1 ≤ Li ≤ 109, for all i.

(There is no guarantee as to how each Li is generated.)

K = 10.

Sample

2 5 2 1 0 3 1234567890 3 3154510113 3 180000000000 3 359999999999 3 5 10 1 90000000000 8 180000000000 7 260000000000 9 260000000001 1 260000000002 1

Case #1: 10.0000000000 Case #2: 36.9238939618

The above cases meet the limits for Test Set 1. Another sample case that does not meet those limits appears at the end of this section.

Note: the Li values in these sample cases for Test Set 1 were chosen for ease of understanding and were not randomly generated. Your solution will be run against these sample cases and must pass them.

In Sample Case #1, all of the attachment points have the same value, so we should pick the pair connected by the longest chord, which in this case is a horizontal diameter of the circle that has a length of 4 centimeters. So the total length needed is 4 + 3 + 3 = 10 centimeters.

In Sample Case #2, the fourth and fifth points are extremely close to the third point, but have much smaller L values. We can effectively rule them out and focus on the possible connections among the first three points, as follows:

- first and second points: length 10√2 + 8 + 7: ≈29.142136.

- first and third points: length ≈19.923894 + 8 + 9: ≈36.923894.

- second and third points: length ≈12.855726 + 7 + 9: ≈28.855726.

Using the first and third points gives us the greatest total length.

The following additional case could not appear in Test Set 1, but could appear in Test Set 2.

1 6 1 10 0 10 15000000000 1 30000000000 1 45000000000 1 60000000000 1 75000000000 1

The correct output is Case #1: 12.2175228580 12.0000000000 11.7653668647 11.5176380902 11.2610523844 3.0000000000 2.7653668647 2.7653668647 2.5176380902 2.5176380902

Notice that there are three possible pairs of points tied for producing the 9th longest cord. Also, it is fine if lines connecting different pairs of points intersect, since Lauren will only be playing one note at a time.

E. Replace All

Problem

Banana Rocks Inc is coming up with a revolutionary technology to perform the common edit operation "replace all". Their implementation replaces every occurrence of a character within a given text with another character. (If the character does not appear in the text, then the operation occurs but has no effect.)

For example, if the starting text is

CODEJAMWORLDFINALS and an operation is performed to replace A with

O, the new text would be CODEJOMWORLDFINOLS. If another operation

is performed on that result to replace O with Y, the final text

would be CYDEJYMWYRLDFINYLS.

Unfortunately, the implementation is incomplete, so it can only perform replacements from a specific list of N pairs of characters. Also, even if a replacement of a specific character c1 with another character c2 is implemented, the reverse replacement of c2 with c1 may or may not be implemented.

You want to try all the implemented replacements. You are given some initial string S to use as the initial text. You can perform any number of replacements in sequential order: the first replacement is performed on S, and the (i+1)-th replacement is performed on the result of performing the i-th replacement. The only requirement is that each implemented replacement is performed at least once during this process. There is no upper limit on how many times you may perform each replacement.

The allowed characters are decimal digits and uppercase and lowercase English alphabet letters. In this problem, uppercase and lowercase versions of the same letter are treated as distinct characters.

What is the maximum number of unique characters that can appear in a text that is the result of the last replacement performed?

Input

The first line of the input gives the number of test cases, T. T test cases follow. Each test case consists of two lines. The first line contains a string S and an integer N: the initial text and the number of implemented replacements. The second line contains N two-character strings R1, R2, ..., RN, representing the implemented replacements. Ai and Bi are the first and second characters of Ri, respectively. The i-th implemented replacement corresponds to replacing all occurrences of Ai with Bi.

Output

For each test case, output one line containing Case #x: y, where x

is the test case number (starting from 1) and y is the maximum number of

unique characters that can appear in a text that is the result of performing all implemented

replacements to S one or more times each, in some order.

Limits

Time limit: 60 seconds per test set.

Memory limit: 1GB.

1 ≤ T ≤ 100.

2 ≤ length of S ≤ 1000, for all i.

Each character of S is an uppercase or lowercase English alphabet letter or

a decimal digit.

Ai is an uppercase or lowercase English alphabet letter or

a decimal digit, for all i.

Bi is an uppercase or lowercase English alphabet letter or

a decimal digit, for all i.

Ai ≠ Bi, for all i.

(Ai, Bi) ≠ (Aj, Bj), for all i ≠ j. (Each replacement is unique.)

Test Set 1 (Visible Verdict)

2 ≤ N ≤ 62.

Bi ≠ Bj, for all i ≠ j.

Test Set 2 (Hidden Verdict)

2 ≤ N ≤ 62 × 61.

Sample

4 CODEJAMWORLDFINALS 2 AO OY xyz 3 xy zx yz CJ 4 20 2O HC KS AB 2 Ab bA

Case #1: 14 Case #2: 2 Case #3: 2 Case #4: 2

The above cases meet the limits for Test Set 1. Another sample case that does not meet those limits appears at the end of this section.

Sample Case #1 is the one in the statement. Notice that if we perform the replacements in the

order mentioned in the statement, we get 13 different characters in the final text. If we perform

them both once in the other order, however, we can get CYDEJOMWYRLDFINOLS, which

has 14 different characters.

In Sample Case #2, one way to get 2 different characters in the final text is to perform the replacements in the order given from left to right, once each.

In Sample Case #3, none of the replacements affect the text at all, so it does not matter how we apply them. We will always be left with the original two letters. Notice that replacements can contain characters not appearing in the initial text, and the initial text can contain characters not appearing in the implemented replacements.

In Sample Case #4, remember that uppercase B is not the same character as

lowercase b.

The following additional case could not appear in Test Set 1, but could appear in Test Set 2.

1 1234 5 12 2X X3 31 X2

The correct output is Case #1: 4.

In this additional sample case, one possibility is to perform the replacements in the

following order: X3 2X X2 2X 12 31. This process goes through the

following strings, starting with S: 1234 1234 1X34 1234 1X34 2X34 2X14.

Analysis — A. Pack the Slopes

Test Set 1

A translation of the problem statement is that we wish to find the minimum cost maximum flow on a directed tree. The rest points can be represented by nodes, and the ski slopes can be represented by edges. A commonly used algorithm to solve minimum cost maximum flow problems is successive shortest paths. In the case of our directed tree, a simplified successive shortest paths approach can be applied.

Observe that we can add skiers greedily. Of all the nodes that can be reached from the top of the mountain (the root of the tree), we should always send a skier to a node with a minimum cost path using only edges that still have capacity remaining. Since we are working with a tree, there is only one path from the root to each node. With this in mind, we can find the path costs to all the nodes using any tree traversal from the root while calculating the cost to each node as the cost of its parent (which we calculated first) plus the cost of the edge connecting them. Then, we can sort the nodes by their path cost in ascending order. We should always use the first node in the list that still has some capacity along its path.

Let us maintain the number of skiers sent through each edge. We can work through our sorted list of nodes in order. We check if the current node in the list still has some capacity by checking the edges in the path, and either update the capacities if we can push another skier, or move on to the next node in the list if we cannot. Doing this for a single skier takes O(N) time. We know we will keep using the same path until some edge reaches capacity, so we can compute the minimum capacity left in the path with a linear pass over it, and simulate sending that many skiers at once. Each such a step takes O(N) time overall, and since we have to try up to O(N) possible destinations, the overall algorithm takes O(N2) time, which is efficient enough to solve Test Set 1.

Test Set 2

For the large input, we need to do more. We can use a heavy light decomposition of the tree to manage the remaining capacities of the edges in the tree. The decomposition will need to support updates and queries on paths from the root to an internal node. The updates involve subtracting an amount from the capacity of each edge. The queries involve finding the minimum capacity of all the edges on a path.

A heavy light decomposition breaks a tree up into a collection of disjoint paths such that the number of decomposition paths from any node to the root is at most O(log N). If we keep a segment tree for each decomposition path, then we can support both the query and update operations in O(log2 N). Note that the segment tree we use must support lazy range updates. This improves the running time of each step of the algorithm for Test Set 2 to take only O(log2 N) time instead of O(N) time. This makes the algorithm run in O(N log2 N) time in total, which is sufficient to solve the Test Set 2.

Analysis — B. Adjacent and Consecutive

As is the case in many problems about games, this problem revolves around evaluating a given state of the game and deciding whether it is winning or losing. The problem defines mistakes as transitions from winning to losing states, so once we have determined the type of each state, we can easily count up the mistakes.

Test Set 1

In all zero-sum finite two person games with no ties, the basic framework to decide whether a state is winning is the same. Let us call a state A-winning (or equivalently, B-losing) if player A can ensure a win from it, or B-winning/A-losing otherwise. For a given state, if the game is over, check the winning condition. Otherwise, if it is player X's turn, try every possible play. If any of them leads to an X-winning state, then the current state is also X-winning. Otherwise, the current state is X-losing. We can implement this as a recursive function, but given that there can be O(N2) possible plays on any given turn, this will only work quickly enough for small values of N.

We can see immediately that there can be a lot of repeated work in that recursive evaluation. For example, consider a state S and another state S' such that S' is the result of making P plays on S. Since there are P! ways to make those plays, state S' potentially needs to be evaluated P! times to evaluate S once. Memoizing the function or turning it into a dynamic programming implementation would thus save a lot of recursive calls.

In addition, there are many states that can be evaluated without the need for a recursive call. Some simple cases include states in which there already are two adjacent and consecutive tiles placed (the "already won" condition for player A). We can prune the recursive calls tree by simply returning those states as A-winning. We can prune it further: if there is a play that creates such a pair during A's turn (the "can win immediately" condition), we just do that instead of trying all possible plays for A. We can also restrict B to only try plays that do not leave a known A-winning condition. There are ways to prune the tree further, some of which we explore in the Test Set 2 section below.

We can pass Test Set 1 either with a combination of simple pruning and memoization/dynamic programming, or a lot of pruning.

Test Set 2

The number of possible states in Test Set 2 is just too big to even fit in memory, even after careful pruning. However, pruning enough can help us find sets of equivalent states, that is, groups of states that we can prove have the same winner. By doing that, we can dramatically reduce the number of memoized states.

Consider only A's turns first. We have already mentioned the "already won" and "can win immediately" conditions above. On top of those, we can notice that if there is some set of three unplayed tiles available with three consecutive numbers, and there is some block of three consecutive cells available, A can win by playing the middle number in the middle cell (we call this the "can win in 2 moves" condition). Whatever B plays next, A's following turn will fulfill either the "already won" condition or the "can win immediately" condition.

The conditions above allow us to find some states which are definitely A-winning. Notice that in any other state where it is A's turn, it's impossible for any of the already-placed tiles to end the game as part of an adjacent and consecutive pair. Therefore, they could be replaced by "unavailable" cells without a specific number on them. So, the remaining cells can be represented as the multiset of sizes of the groups of consecutive cells that are left; call it LC. The exact locations of those groups on the board are not relevant to the final outcome. Since each tile can only form adjacent and consecutive pairs with other leftover tiles, we can similarly represent the remaining tiles with a multiset of lengths of consecutive runs of tiles; call it LT. For example, the state

7 2 6 _ _ 3 _ 4 _ _ 5

has the leftover tiles 1, 8, 9, 10, 11, so its leftover cells can be represented by the multiset LC = {1, 2, 2} and its leftover tiles by the multiset LT = {1, 4}.

In addition, since the "can win in 2 moves" condition has already been checked, we know that at least one of LC and LT does not contain any integer greater than or equal to 3. To simplify the algorithm, notice that the game in which we swap tile numbers and cell numbers is equivalent (because the winning condition is symmetric). Therefore, states with LC and LT swapped are equivalent. We can therefore assume it is always LC that has only integers 1 and 2.

After this compression, the number of states is dramatically reduced. Notice that because we know that there are no three consecutive leftover numbers, at least N/3 numbers have been played already. That means that the sum of the integers in both LC and LT (which is the same for both multisets) is at most 2×N/3. Therefore, the total number of possible multisets LT under those conditions is bounded by the sum of partitions(K) for every K between 0 and 2×N/3, which is partitions(2×N/3+1). Given such an LT multiset, the number of possible multisets LC of only 1s and 2s such that the sum of LC and LT is the same is bounded by N/3 (which is the maximum number of 2s). So, the number of states is no greater than (N/3)×partitions(2×N/3+1), which is a fairly small number for the given limits for N. Moreover, we need only one memoization or dynamic programming table for all test cases.

There are multiple sufficiently fast ways to implement the second player's turns (which are the slowest ones), and there are further restrictions that can be placed on plays to optimize our strategy even more. Some of those options result in an algorithm whose complexity makes it clear that it runs within the time limit. Some other options have a theoretical complexity that is either too large or hard to estimate tightly enough to be convinced it's fast enough. Fortunately, it's possible to compute the recursive function for all possible states without reading any data, and be sure that it runs in time before submitting.

The Test Set 3 that wasn't

We considered having a third test set for this problem requiring a polynomial solution. We progressively found solutions taking O(N3) time, O(N2) time and even one requiring only O(N log N) time. We ultimately decided against adding the test set because the possibility of solving it by guessing the right theorem without proof was a significant concern. The benefit of the extra challenge without making contestants have to read a full extra statement is of course a significant benefit, but it was dampened by our estimation that the likelihood of it being solved legitimately was small. If we had made it worth a small number of points, it would not have been worth the relative effort to solve it. If we had made it worth a lot, though, that would have diminished the value of the work needed for our favorite part of the problem, which is solving Test Set 2.

If you are up to try an extra challenge without spoilers, stop here. If you want some hints on how those solutions go, read ahead!

The first theorem further compresses the states considered in the Test Set 2 solution on A's turns. Consider a state that is not under the "already won", "can win immediately" or "can win in 2 moves" conditions represented by some multisets LC and LT, where only LT can contain integers greater than 2. That state is A-winning if and only if the state represented by multisets LC and L'T is A-winning, where L'T is obtained from LT by replacing each integer X in LT with floor(X / 2) copies of a 2 and a 1 if X is odd. This reduces the number of states of the dynamic programming to O(N3), and we can process each of those states in constant time because there is only a bounded number of effectively different plays to make.

If we pursue that line of reasoning further, we can find that we can check A-winningness with a small number of cases. Let LCi be the number of times i is in LC, and LTi be the number of times i is in LT. Because we already assumed that LC had no integers greater than 2, LCi = 0 for all i ≥ 3. Let K = LC1+2×LC2 = sum of i×LTi over all i be the number of turns left and Z = (LT2+LT3) + 2×(LT4+LT5) + 3×(LT6+LT7) + ... be the number of times 2 appears in L'T as described in the previous paragraph. Then, the second theorem we can prove is that:

- If K = 2, then the state is A-winning if and only if LC2 = LT2 = 1.

- If K > 2, then the state is A-winning if and only if K is odd and 2×(LC2+Z) > K.

From the need to avoid the "already won", "can win immediately" and "can win in 2 moves" conditions, it is possible to reduce the number of plays on B's turn to a set of a bounded number of options that always contains a winning move if there is one. This, together with the fact that the conditions above can be checked in constant time, yields an algorithm that decides for any state whether it is winning or losing in O(N) time, making the overall process of a test case take O(N2) time. With some technical effort, we can represent the board in a way that we can update and use in O(log N) time to check all the necessary conditions, yielding an algorithm that requires only O(N log N) time to process a full test case.

The proofs for the theorems on the paragraphs above are left as an exercise to the reader. As a hint, it is easier to prove the second more general theorem, which is already nicely framed, and then obtain the first theorem as a corollary.

Analysis — C. Hexacoin Jam

The main task in this problem is to count how many ways there are to pick a permutation and a pair of numbers from the list such that their (possibly truncated) sum falls within the given interval. This is the numerator of a fraction representing the desired probability. The number of total picks (our denominator) is simply 16! × N × (N - 1) / 2. Then, we can use a known algorithm (like dividing numerator and denominator by the greatest common divisor) to reduce the fraction to the desired form. In the rest of the analysis, we focus only on calculating the non-reduced numerator.

One common denominator among all solutions for all test sets is that the actual values of the digits in the list do not matter. Once we fix a pair of numbers X and Y from the list, only the "digit structure" of the pair (X, Y) matters. The digit structure of a pair of numbers is a unique way to see their coupling as digits. We define it as the lexicographically smallest pair (P[X], P[Y]) across all possible digit permutations P, where P[X] is the result of replacing each digit of X with the value assigned to it by P. Notice that digit structures have length 2D.

The total number of digit structures grows rapidly when D increases, but depends only on D, which has small limits. The total number is 15 for D=2, 203 for D=3, 4140 for D=4, and 115975 for D=5.

Test Set 1

In Test Set 1, the number of different digit structures is really small. In addition, for a fixed structure, we only care about the values assigned by the chosen permutation to up to 2D digits. For each digit structure with d unique digits, we can compute the value of the truncated sum for a valid assignment of those d digits, noting that there are (16 - d)! ways to generate each assignment.

The number of valid assignments can be somewhat large at 16! / 10! or about 6 million for 6 different digits, but there is only one digit structure within Test Set 1 that has that many different digits. There are a handful that have 5, for which there are around half a million assignments each, and most structures have 4 or fewer different digits, which have fewer than 50 thousand different assignments each. Moreover, this computation only depends on D and not on the rest of the input, so we have to do it only once for each possible structure for D=2 and D=3.

Given the precomputation above, we can iterate over the pairs of integers from the list, compute their digit structures, and use two binary searches to see how many of the numbers in the list are in range. Then, we compute the number of different digits in the structure d and multiply the total by (16 - d)!, which gives us the number of sums in range that can be produced for the given pair. Summing that result over all possible pairs gives us the answer we need.

Test Set 2

The precomputation above can be slow in Test Set 2. Not only do we have 4140 additional digit structures to process, but most importantly, a few of those have 7 and 8 unique digits. Each additional digit means an order of magnitude extra possible assignments. There are several ways to handle this, and we need only a few of the tricks below to make it work.

The first issue is that the lists we need to store are now too long to fit in memory. Since there are only up to 164 different results, many of those results would be repeated, so we can compress them based on those repetitions. This is still tough to pull off, so the best thing to do is to just not store the full list. We know we only care about how many items on the list are in range for up to T different ranges. So, we can read all cases before starting the computation, split the full range of sum results at every A and B that we read into up to 2T+1 minimal ranges, and then compress together all numbers that are within the same range. This definitely fits in memory.

We can also choose to not memoize between different test cases, and treat them one at a time. If we do that, we have a fixed A and B, so there is no need for lists, just a counter. We can either treat each pair of numbers individually as well (speeding up the process of assignments — see below) or try to memoize digit structures if any are repeated within the same test case. It is possible that almost every pair has a different digit structure, of course, but there are few digit structures with the maximum number of unique digits. This means the memoization reduces the total runtime in what was our previously worst case, and the new worst case (all different structures) is not as bad because many of those structures will have fewer different digits.

We can reduce the number of digit structures further by realizing that digit structures like (011, 022) and (012, 021) are equivalent, in the sense that for any assignment, their sum is the same: both are 11 × (P[2] + P[3]) + 100 × P[0], where 11 and 100 are in base 16. This only works in conjunction with some form of memoization.

Once we have either a range [A, B] or a small list of ranges fixed at the moment of processing the assignments, we can use it to do some pruning. Suppose we assign values to the digits in most significant positions first. When we have done a partial assignment, we can compute or estimate a range of possible values that the sum may have when we finish. As more highly significant digits get assigned, that range shrinks. If that range is completely inside our target range (or one of our target ranges) we can stop and know that all further assignments work, counting them using a simple multiplication. If the range is completely outside of the target range (or all target ranges), we can also stop and count nothing further.

As mentioned above, we need only some of the optimizations above to manage to pass Test Set 2. Of course, the more of them we find and implement, the more confident we can be about the speed of our solution.

Test Set 3

To simplify the problem, we can use a common technique when dealing with counting numbers in closed intervals [A, B]. We write a function f(U) that only calculates the value for closed intervals [0, U-1]. Then, the result for an interval [A, B] is f(B + 1) - f(A). In this case, we can use this to take care of the overflow as well, by simply ignoring the overflow and counting the number of hits in the interval [A, B] plus the number of hits in the interval [16D + A, 16D + B], which are the only possible sums whose truncation would yield a result in [A, B]. After we write our function f, this translates to a result of f(B + 1) + f(16D + B + 1) - f(A) - f(16D + A). We focus now on calculating f(U), that is, the number of picks of a permutation and a pair of numbers that yield a (non-truncated) sum of strictly less than U.

Both the number of possible values a sum can have and the possible number of pairs are small (for computers). We can use an asymmetric "meet in the middle" approach to take advantage of both those facts. We do this in a similar way as what we did for Test Set 1 and possibly for Test Set 2, by keeping a count on each digit structure, and then iterating over the pairs of numbers from the list to see how much we have to use each of those counts.

First, let's consider all the ways to add up to something less than U. Let us fix the first number to have a value of x, so the second number can have any value less than U-x. A number y is less than U-x if and only if it has a smaller digit than U-x at the first position at which they differ. We can represent this set of ys by saying they are all the ys that start with the first i digits of U-x, then continue with a digit d smaller than the (i+1)-th digit of U-x, and any remaining digits can be any digit.

For example, if U=2345 and x=1122, then U-x=1223 and y can be of the form 0***, 10**, 11**, 120*, 121*, 1220, 1221, 1222, where * represents any digit.

For each pair of an x and a prefix for y, we can represent all the pairs of numbers from the list that match by matching that with a digit structure that allows for *s at the end. In this way, a pair of numbers from the list X and Y can be mapped to x and y by a permutation if they have the same digit structure, disregarding the actual digit values. To represent this, we normalize the digit structure as we did before: no digit appears for the first time before another smaller digit. Therefore, a structure like x=1122 and y=10** is represented as x=0011 and y=02**. As before, the number of permutations that can match a pair of numbers to this digit structure is (16 - d)!, where d is the number of unique digits that appear in the structure.

Notice that different values of x can yield the same structure. For example, x=1133 yields U-x=1212, and for y=10** the structure is the same as for x=1122 and y=10**.

For each digit structure of 2D total digits that have between 0 and D-1 asterisks at the right end, we count the number of permutations that make its parts add up to something less than U.

We now process the pairs of numbers from the list. For each pair, we build its normalized digit structure as before, and add the count for that digit structure to our running total. We also add the count of the structures that result in replacing up to D-1 rightmost digits with *s.

We can express the complexity of this algorithm in terms of the base B, the number of digits of each number D, and N, the size of the list of numbers. The first stage iterates through up to O(BD) possible values for x, and for each one, considers up to O(B×D) digit structures for y. If factorials up to B are precomputed, and we store the results in a hash table or an array with a clever hashing for digit structures that keeps hashes unique and small, this requires only constant time per pair, so O(BD+1×D) time overall. The second stage requires processing up to O(D) digit structures per pair, so it takes O(N2×D) time in total. The sum of both stages gives us the overall time to compute f, which is O(BD+1×D + N2×D). Since we need only a constant number of calls to f, that is also the overall time complexity of our algorithm.

Another solution is to to use all of our Test Set 2 tricks in an efficient way. Consider especially the last one: when considering the pair of i-th most significant digits, only the pairs whose sum is close to either that value at A or that value at B produce a range of possible sums for the pair that is neither fully inside [A, B] nor fully outside of it. That means that for the i-th digits, there are only a linear number of pairs of digits that can be assigned at that position that would make the process not stop immediately, instead of a quadratic number. This shrinks the number of assignments to approximately the square root of its previous value, behaving more as exponential on D than as an exponential on 2D. This gives us a time complexity comparable to that of the other solution presented above. While the overall time complexity is still higher, these two solutions can perform pretty similarly for the limits we have: the bound on the number of assignments is actually smaller than BD because it behaves more like B! / (B - D)!. Those savings, plus those from not needing extra D terms, make up for the fact that the backtracking solution's time complexity has a term with behavior similar to BD and a term with behavior similar to N2 multiplied together instead of added.

Notice that the basic insights of both solutions are closely related. The pruning provides such a large speedup for the same reason that we can use the "meet in the middle" approach based on digit structures.

Analysis — D. Musical Cords

Test Set 1

In this problem, we are given a circle, a collection of points around the perimeter of the circle, and the attachment values for each of those points (Li). We want the top K pairs of points that maximizes the Euclidean distance between the points plus their attachment values. For Test Set 1, we know that the point values are picked randomly. We will need to use this property somehow. We also know that K=1, so we are looking for the top pair of points.

Imagine considering the points around the circle in the clockwise order. Let us say that we are at a point p. Also, without loss of generality, let us only consider points that are at most 180 degrees clockwise from p. That is, we only consider half of the circle starting at p. If we find the maximum pairing (maximum distance plus attachment values) for every possible p, the maximum over all of those pairs will be the answer. This is because considering each point, (and points that are up to 180 degrees clockwise from it) will consider every possible pair of points at least once.

So, we are considering all points p in the clockwise order around the circle and trying to find the maximum pairing for each of these. If we consider the possible other points we could pair with p in the counterclockwise order starting from the location 180 degrees clockwise of p (the "other side" of the circle from p), then we can observe some useful properties. Once we have considered some pairing point x, then some further counterclockwise point y will be closer to p. Thus, for y to be the maximum pairing, its attachment value must be greater than x's attachment value. So, we only need to consider points that increase in their attachment values.

We know that the attachment values are randomly selected. In a sequence of N integers randomly selected from a uniform range, we expect the running maximum to change only log N times. To see why, observe that first element has a 1/1 chance of being the new maximum, the second element has a 1/2 chance, the third element has a 1/3 chance, and so on. This is the harmonic series. The sum of its first N terms is bounded by O(log N). Thus, we expect to see only O(log N) changes of maximum. This means the explained solution has an expected time complexity of O(N log N), which should be fast enough to pass. The worst case among all possible inputs takes O(N2) time. However, as we showed, this is extremely unlikely with random attachment values.

Now, when we are considering a point x, we need an efficient way to find the first point in the counterclockwise order from x whose attachment value is greater than x. This is actually equivalent to a known problem: the next greater element problem. The twist is that our values are on a circle, so we have wraparound. One way to deal with this is to make an array of attachment values, append it to itself, and then apply a fast algorithm for the next greater element problem to the resulting array.

There are other approaches that also take O(N2) time in the worst case, because they might compare a high percentage of all pairs, but use other clever speedups like jumping to the next greater value, to have a high chance to cut the number of comparisons way down.

Test Set 2

The attachment values of points are not randomly generated in this test set, so we will need to find a different approach. Also, K=10, so we also need to find more than one pair of points. Instead of trying to find the top K pairs directly, we can find the best pairing for each point, and extend this solution to find the top K pairs overall. Let's first figure out how to find the best pairing for each point, then explain how to extend it to find the top K pairs.

We start by finding, for each input point P, which other input point Q is its best pairing. That is, we find for which other input point Q the function LP + LQ + distance(P, Q) is maximized.

Now consider points P not necessarily in the input. We define a value function VP(Q) = distance(P, Q) + LQ. Notice that if P is a point in the input, VP(Q) + LP is the amount of cord required to connect P and Q.

Let us approach the problem visually. Imagine moving P around the circle in the clockwise order and computing the value function VP(Q) for all the input points Q that are up to 180 degrees clockwise of P. As we move P, how does the distance function to some other point change?

The Euclidean distance part of the distance function is equivalent to computing the length of a circular chord. That is, VP(Q)=2×R×sin(angle(P, Q)/2)+LQ. Notice here than the angle function is in the clockwise direction, and it's always less than or equal to 180 degrees. Consider how this function changes when P changes. That is, consider the function WQ(P)=VP(Q). As the domain for VP is the Qs that are up to 180 degrees in the clockwise direction, the domain for WQ is the Ps that are up to 180 degrees in the counterclockwise direction.

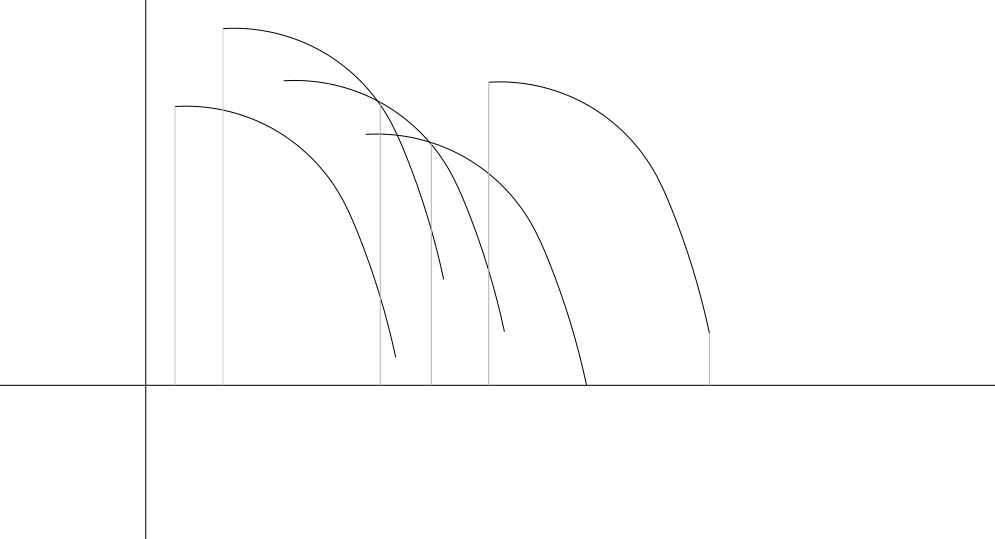

For each Q, WQ has a constant term LQ, and then a term that looks like half a sine wave. Notice that the graph of the function is the same for any Q, but translated: the change in angle(P, Q) translates it horizontally, and the change in LQ translates it vertically. An example of how these graphs might look follows. Each black curve corresponds to WQ for a different point Q.

Because all of the distance functions have the same shape, we can see that WQ has maximum values compared to all other W functions for a continuous range of points P. So, can we efficiently compute these ranges for each point? If so, we can use these ranges to find the maximum for each possible point by finding which maximum's range it falls into.

We can analyze this graph by sweeping across it from left to right—that is, increasingly along the x-axis. Since the x-axis represents points on a circle, it may be easier to visualize if we instead represent each point by its angle with respect to the positive half of the x-axis, as usual. For a fixed angle A, let us consider only the curves whose domain includes at least one point in the range [0, A] and call that set CA. As we consider increasing values for A, at some point we consider a curve for the first time, with domain [A, B]. The curve is definitely maximum among all curves in CA at B, because it is the only curve at that point. So, the range for which it is the maximum must end there. Since we know there is only one such range, we can binary search to find the beginning of the range.

We keep a sorted list of non-overlapping ranges as we go, and add new ranges to the end. Note that we may need to pop off some ranges from the end of the list if we find a new range that covers them completely, or shorten them if the new range covers them only partially. There can be zero or more left-most ranges where the new curve is fully under the maximum curve in that range, zero or more right-most ranges where the new curve is fully over the maximum curve in that range, and zero or exactly one range where the new curve and the curve from the range cross. We binary search within the range for the exact crossing point.

One issue we still need to deal with is that the graph is actually cyclic. It represents the distance function to points as we go around a circle. So, the distance functions we plot can "wrap around". We can deal with this via a method similar to what we used in Test Set 1: append a copy of the input to itself, and go around the input twice.

The final list of ranges can be used to get the maximum pairing for each point on the circle. For each input point P, we find the Q for which WQ is the maximum at P. Since we only need to check input points P, we can restrict the domains of all functions and intervals discussed so far to input points without affecting the correctness. This allows us to binary search over an array of O(N) values as opposed to over a range of real numbers.

The time complexity of constructing the list of ranges is therefore O(N log N). Then, we go through the list iterating over the list of possible Ps for each Q. While a particular Q can have a long range, overall, we iterate over each input P at most once (or twice for the duplicated input). This makes the final step take linear time.

An O(N) algorithm for the above problem does exist. This is left as an exercise for the reader.

We have a way to find the best pairing for each point. How do we extend this solution to find the top K unordered pairings? It is possible that a pair in the top K is not in our list of best pairings for each point. However, we can still use our list. Consider sorting the list by the Euclidean distance plus attachment values of the pair in descending order. The first entry in the list will definitely be the top pair overall. The second top pair will either be the next entry in our list, or it will be paired with one of the points in our top pair. We can look at all pairings involving the points in our top pair, and now we know the second best pair. We can repeat this process to find the top K. In each step, the pair we choose is either the next best pair in our list that we haven't seen, or it is a pairing with a point in the previous pairs in our list. In each of these K steps, we take O(N) time. Sorting our list initially takes O(N log N) time, so our algorithm requires O(N log N + N × K) time overall, which is fast enough to pass.

Analysis — E. Replace All

The first thing we can notice is that since we replace every occurrence of a character, and at the end we count unique characters, we can regard the input S as a set. That is, multiple occurrences of the same character can be ignored, and the order of the characters is unimportant. We write "x → y" to represent the replacement of a character x by a character y. Here, and in the rest of the analysis, we use both lowercase and uppercase letters to represent variables, not actual letters that can happen in the input.

To simplify the explanations, we can represent the list of replacements as a directed graph G where nodes represent characters, and there is an edge from x to y if and only if there exists a replacement x → y in the input. Notice that each weakly connected component of G defines a problem that can be solved independently, and combining the results corresponds to adding up the number of distinct characters that can occur in the final text from each group. In particular, notice that a character c that does not appear in any replacement forms a single-node connected component, and the result for each such character is either 1 if c is in S, and 0 otherwise.

Let us define diversity as the number of unique characters in a text. Diversity of the last text is exactly what we are trying to maximize. If both x and y are in U, the diversity after performing x → y on U is 1 less than before. On the other hand, if at least one of x and y is not in U, then the diversity before and after performing x → y is the same. That is, the replacement can either cause a diversity loss of 1, or not cause any loss. We can then work on minimizing the diversity loss, and the final answer will be the original diversity minus the loss.

Test Set 1

In Test Set 1 the in-degree of each node of G is limited to at most 1. This restricts the types of weakly connected components the graph can have. If there is a node in the component with in-degree equal to 0, then the component is a directed tree and the node with in-degree equal to 0 is the root. If all nodes in the component have in-degree equal to 1, then there is a cycle (which we could find by starting at any node and following edges backwards until we see a node for the second time). Each node in the cycle only has its incoming edge coming from another node in the cycle, but it can have edges pointing out to non-cycle nodes. This means each cycle-node can be the root of a directed tree. These are the connected components of pseudoforests.

We can break down this test set by component type. Let's consider the simplest possible tree first: a simple path c1 → c2 → ... → ck. If both ck-1 and ck are in the initial text S, then we cannot perform ck-1 → ck without losing diversity. No other replacement in the text can make ck-1 or ck disappear from the text, so they will both be present until ck-1 → ck is performed for the first time. In addition, after we perform ci-1 → ci for any i, we can immediately perform ci-2 → ci-1 without loss of diversity, since ci-1 will definitely not be in the text then. Therefore, performing every replacement in the path in reverse order works optimally.

With some care, we can extend this to any tree. Consider a leaf node y that is maximally far from the root. The replacement x → y that goes into it is in a similar position as the last replacement in a path, but there could be other replacements that remove x from the text before performing x → y, which would prevent x → y from causing diversity loss. Since y is maximally far from the root, however, all other replacements that can help also lead to leaf nodes. If x and the right side of all replacements that go out of x are in S, there is no way to avoid losing diversity. However, only the first one we perform will cause diversity loss, whereas all the others can be done when x is no longer in the text, preventing further loss. We can generalize this by noticing that we can "push" characters down the tree without loss of diversity as long as there is at least one descendant node that is not in the current text. This is done by considering the path from a node x to the descendant that is not in the text y, and processing the path between x and y as described earlier.

From the previous paragraph, we can see that any edge x → y that does not go into a leaf node can be used without diversity loss. Even if there are no descendants of x in the tree that are not in the initial text S, we can process some unsalvageable leaf node first, and then we will have room to save x → y. Any edge z → w that goes into leaf nodes may be salvageable as well, as long as z has other descendants. Putting it all together, we can process the nodes in reverse topological order. At the time when we process a node x, if there is any descendant of x that is not in the current text, then we can push x down and then process all of x's outgoing edges without any diversity loss. If we process a node x and its subtree consists only of nodes representing letters currently in the text, that means that we will lose 1 diversity with the first edge going out of x that we process (it doesn't matter which one we process first), but that loss is unpreventable.

To handle cycles, consider first a component that is just a simple cycle. If there is at least one node representing a character x not in S, then we can process it similarly to paths, without diversity loss: we replace y → x, then z → y, etc, effectively shifting which characters appear on the text, but keeping diversity constant. If all characters in the cycle are in S, then the first replacement we perform will definitely lose diversity, and after that we can prevent diversity loss by using the character that disappeared as x in the process from the first case.

If a component is a cycle with one or more trees hanging from it, we can first process each tree independently. Then, each of those trees will have at least one node representing a character not in the current text: either there was such a node from the start, or it was created as part of processing the tree. So, we can push down the root of one of those trees (which is a node in the cycle) without losing diversity. Then, there will be at least one node in the cycle whose represented character is not in the text, so we can process it without diversity loss.

Test Set 2

In Test Set 2, the graph G can be anything, so we cannot do a component type case analysis like we did for Test Set 1. However, we can still make use of the idea of processing paths and pushing characters to avoid diversity loss following our first replacement.

We tackle this by morphing the problem into equivalent problems. We start with the problem of finding an order of the edges of G that uses each edge at least once while minimizing steps in which we cause diversity loss.

First, notice that once we perform x → y, we can immediately perform any other x → z without diversity loss. So, if we have a procedure that uses at least one replacement of the form x → y for each x for which there is at least one, then we can insert the remaining replacements without altering the result. That is, instead of considering having to use every edge at least once, we can simply find a way to use every node that has out-degree at least 1. Furthermore, any replacement x → y such that x is not in S can be performed at the beginning without changing anything, so we do not need to worry about using them. Notice that we are only removing the requirement of using particular edges, not the possibility. Therefore, we can make the requirements even less strict: now we only need to use every node with out-degree at least 1 that represents a character present in S.

Now that we have a problem in which we want to cover nodes, and we know from our work in Test Set 1 that we can process simple subgraphs like paths and cycles in a way that limits diversity loss, we can define a generalized version of the minimum path cover problem that is equivalent.

Given a list of paths L, let us define the weight of L as the number of paths in L minus the number of characters c not in S such that at least one path in L ends with c. That is, for each character c not in S, we can include just one path ending in c in L without adding to its weight. Let us say that a list of paths L covers G if every node in G with out-degree at least 1 that represents a character in S is present in some path in L in a place other than at the end. That is, at least one edge going out of the node was used in a path in L. We claim that the minimum weight of a cover of G is equal to the minimum number of steps that cause diversity loss in the relaxed problem.

To prove the equivalency, we first prove that if we have a valid solution to the problem that causes D diversity loss, we can find a cover that has weight at most D. Afterwards we prove that if we have a cover with weight D, we can find a solution to the problem that causes a diversity loss of at most D.

Let r1, r2, ..., rn be an ordered list of replacements that satisfies the relaxed conditions of the problems and has D steps that cause diversity loss. We build a list of paths L iteratively, starting with the empty list. When considering replacement ri = x → y:

- If the text before and after ri are the same, we do not change L.

- If there is a path starting with y in L, we append x at the start of it.

- Otherwise, we add ri as a new path in L.

Notice that the first time we perform a replacement x → y where x is a node that we need to cover, we cannot be in case 1: the xs that were originally in S have not been replaced yet, so ri will replace them and change the text. Cases 2 and 3 add x as a non-final part of a path. Further processing can only append things to the start of the path, so x will remain a non-final member of it. This proves that L is a cover.

Now, suppose step ri = x → y falls into case 3 and adds a path to L that increases its weight. Because we are not in case 1, x is in the text at the time we begin to perform ri. Since we are in case 3, there wasn't a path in L starting with y. That means that for any previous replacement of the form y → z that erased ys from the text, there was another replacement of the form w → y that that reintroduced y and caused it to no longer be a starting element of the path in L in which y → z was added. Moreover, since we are assuming this step increases L's weight, either y was in S or there is a path in L ending with v → y, which introduced y into the text. In either case, y is in the text before performing ri, meaning that ri causes diversity loss. Since every step that adds weight to L is a step that causes diversity loss, the weight of L is at most D.

Now let us prove that given a cover L of weight D, we can find a solution to the problem with diversity loss D. For that, we need to process paths in a manner that is not as simple as the one we used for Test Set 1. The way in which we process paths in Test Set 1 could only result in diversity loss on the first replacement made, but it also produced other changes in the text. Those changes could hurt us now that we have to process multiple paths within the same connected component.

To process a path P, we consider the status of the text U right before we start. We call a node strictly internal to a path if it is a node in the path that is neither the first nor the last node in the path. We split P into ordered subpaths P1, P2, ..., Pn such that the last node of Pi is the same as the first node of Pi+1 (the edges in the subpaths are a partition of the edges in P). We split in such a way that a strictly internal node of P is also a strictly internal node of the Pi where it lands if and only if the character it represents is in U. Then, we process the subpaths in reverse order Pn, then Pn-1, ..., then P1. Within a subpath, we perform the replacements in the path's order (unlike in Test Set 1). Since the intermediate characters within a subpath do not appear in S, performing all the replacements of a subpath that starts with x and ends with y has the net effect of replacing x by y. In the case of P1, if x is not in U, then there is no effect. After processing subpath Pi+1 that starts with xi+1, xi+1 is not in U, and after processing subpath Pi, xi is not in U and xi+1 is restored. The net effect is that processing the path in this way effectively replaces the first character of the path that is in U by the last character of the path, and doesn't change any other character.

Let L' be a sublist of L consisting of exactly one path that ends in c for each character c not in S. L - L' contains exactly D paths. We process the paths in L' first, and then the ones in L - L'. When we process a path in L', we change the text by introducing a new character to it. However, that character is not in the original S, and it is always a new one, so those changes cause no diversity loss. When processing all other paths, since the net effect is that of a single replacement, we can cause at most 1 diversity loss each time, which means at most D diversity loss overall.

Notice that if we have a path that touches a node x that represents a character in S, we can replace that node in the path with a cycle that starts and ends at x and visits its entire strongly connected component. A replacement like this one on a path in a list does not change its weight. Therefore, we can relax the condition to require covering just one node of out-degree at least 1 and with a represented character in S per strongly connected component.

We can turn this into a maximum matching problem on a bipartite graph similarly to how we solve the minimum path cover problem in directed acyclic graphs. Consider a matching M between the set of strongly connected components C (restricted to nodes representing characters in S) that need to be covered, and the set D equal to C and all remaining nodes representing characters not in S. An element c from C can be matched to an element d from D if there is a non-empty path from c to d (it cannot be matched with itself). This matching represents a "next" relationship assignment, and unmatched elements represent "ending" nodes in paths. More formally, let f(c) be the function that maps a member of C to the member of D that corresponds to the same strongly connected component. We can create a cover with weight exactly the size of the unmatched elements from C by creating a path that adds weight for each unmatched element from C, and adding paths that do not add weight for each element in D - C.

For each unmatched element c from C, add it to a new path, then append to its left its matched element M(f(c)), then M(f(M(f(c)))), etc. Then, for each matched element c in D - C, which are the characters not in S, do the same. This yields a set of paths that touches every element of C, and the ending elements are either unmatched elements from C or characters not in S which do not add weight. Notice that some unmatched elements could yield single node paths, which are paths not really considered above. However, since such a path always counts toward the weight, we can append any replacement to its end to make it not empty without increasing the weight.

Therefore, the size of the unmatched elements from C in a maximum matching of the defined relation, which we can calculate efficiently by adapting a maximum flow algorithm like Ford-Fulkerson, is equal to the minimum weight of a cover, which is what we needed to calculate.

Despite the long proofs, the algorithm is relatively straightforward to implement. The graph can be built in time linear in the size of the input, while the strongly connected components and their transitive closure, which are required to build the relation for the matching, can be found in quadratic time in the size of the alphabet A (the relation itself can have size quadratic in A, so it cannot be done faster). The maximum matching itself takes time cubic in A, because it needs to find up to A augmenting paths, each of which can take up to O(A2) time (linear in the size of the relation graph). This leads to an overall complexity of O(A3), which is fast enough for the small alphabet size of this problem. Moreover, we could use something simpler and technically slower for the strongly connected components and their transitive closure like Floyd-Warshall, simplifying the algorithm without affecting the overall running time.